

https://www.cs.toronto.edu/~frossard/post/vgg16/

Objective:

To explore an alternative use of DNNs by implementing the style transfer algorithm.

Deliverable:

For this lab, you will need to implement the style transfer algorithm of Gatys et al.

- You must extract statistics from the content and style images

- You must formulate an optimization problem over an input image

- You must optimize the image to match both style and content
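In Gatys et al., the content statistics are a layer's raw activations, and the style statistics are Gram matrices (inner products between every pair of channels' flattened feature maps). The image is then optimized to minimize a weighted sum of a content loss and per-layer style losses. The NumPy sketch below computes these losses for activations of shape (height, width, channels). The function names, the equal per-layer weights, and the `alpha`/`beta` defaults are illustrative assumptions, not values from the lab scaffolding.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a (height, width, channels) activation tensor.

    Each column of the reshaped matrix F is one channel's flattened
    feature map, so G[i, j] is the inner product of channels i and j.
    """
    h, w, c = features.shape
    F = features.reshape(h * w, c)  # M = h*w positions, N = c channels
    return F.T @ F                  # shape (c, c)


def content_loss(gen_features, content_features):
    # 1/2 * sum of squared differences between raw activations
    return 0.5 * np.sum((gen_features - content_features) ** 2)


def style_layer_loss(gen_features, style_gram):
    # Squared Gram-matrix difference, normalized by 4 * N^2 * M^2
    h, w, c = gen_features.shape
    n, m = c, h * w
    G = gram_matrix(gen_features)
    return np.sum((G - style_gram) ** 2) / (4.0 * n ** 2 * m ** 2)


def total_loss(gen_content, content_target, gen_styles, style_grams,
               alpha=1.0, beta=1000.0, layer_weights=None):
    """alpha * content loss + beta * weighted sum of per-layer style losses.

    alpha, beta and the equal layer weights are illustrative defaults.
    """
    if layer_weights is None:
        layer_weights = [1.0 / len(style_grams)] * len(style_grams)
    l_style = sum(wt * style_layer_loss(g, s)
                  for wt, g, s in zip(layer_weights, gen_styles, style_grams))
    return alpha * content_loss(gen_content, content_target) + beta * l_style
```

The style targets only need the Gram matrices of the style image, so those `(channels, channels)` arrays are all that has to be stored per style layer.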

You should turn in the following:

- The final image that you generated

- Your code

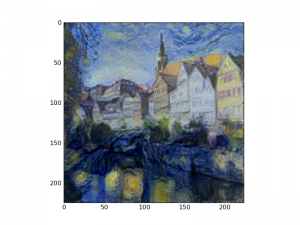

An example image that I generated is shown above.

Grading standards:

Your notebook will be graded on the following:

- 35% Correct implementation of Siamese network

- 35% Correct implementation of Resnet

- 20% Reasonable effort to find a good-performing topology

- 10% Results writeup

Description:

For this lab, you should implement the style transfer algorithm referenced above. We are providing the following:

In the scaffolding code, you will find some examples of how to use the provided VGG model. (This model is a slightly modified version of the code available at https://www.cs.toronto.edu/~frossard/post/vgg16/.)

Note: In class, we discussed how to construct a computation graph that reuses the VGG network three times (once each for the content, style, and optimization images). It turns out that you don't need to do that. Instead, you only need to evaluate the VGG network once on the content image and once on the style image, and save the resulting activations; during optimization, only the image being optimized has to be passed through the network.
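To make the note concrete: the target activations are computed once, outside the optimization loop, and only the image being optimized is re-evaluated at each step. So that it runs with NumPy alone, the sketch below substitutes a fixed random linear map (`W` and `features` are hypothetical stand-ins, not VGG) and uses plain gradient descent on the content loss; in the lab you would use the VGG activations and TensorFlow's automatic differentiation instead.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for VGG: a fixed linear map from a flattened
# 8x8 "image" to 16 "activations". Its weights are never updated.
W = rng.standard_normal((16, 64)) / 8.0


def features(image):
    return W @ image.ravel()


content_image = rng.random((8, 8))

# Evaluate the network on the content image ONCE and keep the result.
content_target = features(content_image)

# Start from noise and optimize the pixels, not the network weights.
image = rng.random((8, 8))
step = 1.0 / np.linalg.norm(W, 2) ** 2  # safe step size for this quadratic toy loss

losses = []
for _ in range(200):
    residual = features(image) - content_target   # only the generated image is re-evaluated
    losses.append(0.5 * np.sum(residual ** 2))
    grad = (W.T @ residual).reshape(image.shape)  # gradient of 0.5 * ||W x - p||^2
    image -= step * grad
```

The same structure carries over to style: compute the style image's Gram matrices once, store them, and compare against the Gram matrices of the current image inside the loop.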

The steps for completion of this lab are:

- Load all of the data. Create a test/training split.

- Establish a baseline accuracy (i.e., if you randomly predict same/different, what accuracy do you achieve?)

- Use TensorFlow to create your Siamese network.

    - Use ResNets to extract features from the images.

    - Make sure that parameters are shared across both halves of the network!

- Train the network using an optimizer of your choice.

    - You should use some sort of SGD.

    - You will need to sample same/different pairs.
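Pair sampling can be sketched as below, assuming the `labels` array of subject IDs produced by the loader in the Hints section. The function name `sample_pairs` and the exact 50/50 balance are illustrative choices. "Same" pairs can only come from subjects with at least two images, while "different" pairs are drawn from any two images whose subjects differ.

```python
import numpy as np


def sample_pairs(labels, n_pairs, rng):
    """Sample n_pairs rows of (i, j, same), alternating same/different.

    labels: array of subject IDs, e.g. the (N, 1) array built by the loader.
    same is 1 for a same-subject pair and 0 for a different-subject pair.
    """
    labels = np.asarray(labels).ravel()
    by_subject = {}
    for idx, subj in enumerate(labels):
        by_subject.setdefault(subj, []).append(idx)
    # Only subjects with at least two images can form a "same" pair.
    multi = [s for s, idxs in by_subject.items() if len(idxs) >= 2]

    pairs = []
    for k in range(n_pairs):
        if k % 2 == 0:
            # same pair: two distinct images of one subject
            subj = multi[rng.integers(len(multi))]
            i, j = rng.choice(by_subject[subj], size=2, replace=False)
            pairs.append((int(i), int(j), 1))
        else:
            # different pair: any two images (any subject) whose IDs differ
            while True:
                i, j = rng.integers(len(labels), size=2)
                if labels[i] != labels[j]:
                    break
            pairs.append((int(i), int(j), 0))
    return np.array(pairs)
```

For example, `pairs = sample_pairs(labels, 1000, np.random.default_rng(0))`. Because exactly half of the sampled pairs are "same" pairs, predicting same/different uniformly at random has an expected accuracy of 50%, which is one natural baseline.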

Note: you will NOT be graded on the accuracy of your final classifier, as long as you make a good faith effort to come up with something that performs reasonably well.

Your ResNet should extract a vector of features from each image. Those feature vectors should then be compared to calculate an “energy”; that energy should then be input into a contrastive loss function, as discussed in class.
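The energy and loss can be sketched as follows, assuming Euclidean distance between feature vectors as the energy and the margin-based contrastive loss of Hadsell, Chopra and LeCun (2006). The form discussed in class may differ (for example in the margin value or in which label denotes "same"); the function names and `margin=1.0` are illustrative.

```python
import numpy as np


def energy(f1, f2):
    # Euclidean distance between feature vectors (one row per pair)
    return np.sqrt(np.sum((f1 - f2) ** 2, axis=-1))


def contrastive_loss(e, same, margin=1.0):
    """Mean contrastive loss over a batch.

    e:    energies, shape (batch,)
    same: 1 for same-subject pairs, 0 for different-subject pairs
    Same pairs are pulled together (loss grows with e); different pairs
    are pushed apart until their energy exceeds the margin.
    """
    same = np.asarray(same, dtype=float)
    pull = same * 0.5 * e ** 2
    push = (1.0 - same) * 0.5 * np.maximum(0.0, margin - e) ** 2
    return np.mean(pull + push)
```

In TensorFlow, the gradient of a square root is infinite at zero, so identical feature vectors can produce NaNs during training; adding a small epsilon inside the square root is a common workaround.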

Remember that your network should be symmetric, so if you swap input images, nothing should change.
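One way to get both properties (shared parameters, and no change when the inputs are swapped) is to compute each half's features with the same function and the same weights, then compare them with a symmetric distance. The sketch below uses a single linear layer with a ReLU as a hypothetical stand-in for the ResNet; `embed` and `siamese_energy` are illustrative names. In TensorFlow the equivalent is to build the ResNet once (for example, one Keras model called on both inputs, or reused variables in TF1-style graph code) rather than constructing two separate copies.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the ResNet feature extractor: ONE weight matrix,
# used by both halves of the network (this is the parameter sharing).
W = rng.standard_normal((8, 32))


def embed(x):
    return np.maximum(0.0, x @ W.T)   # (batch, 32) -> (batch, 8)


def siamese_energy(x1, x2):
    f1, f2 = embed(x1), embed(x2)     # same function, same weights
    return np.sqrt(np.sum((f1 - f2) ** 2, axis=-1))


a = rng.standard_normal((4, 32))
b = rng.standard_normal((4, 32))
# Swapping the inputs must not change anything.
assert np.allclose(siamese_energy(a, b), siamese_energy(b, a))
```

A check like the final assertion is a cheap sanity test to run on the real network as well.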

Note that some people in the database only have one image. These images are still useful, however (why?), so don't just throw them away.

Writeup:

As discussed in the “Deliverable” section, your writeup must include the following:

- A description of your test/training split

- A description of your ResNet architecture (layers, strides, nonlinearities, etc.)

- How you assessed whether or not your architecture was working

- The final performance of your classifier

This writeup should be small - less than 1 page. You don't need to wax eloquent.

Hints:

To help you get started, here's a simple script that will load all of the images and calculate labels. It assumes that the face database has been unpacked in the current directory, and that there exists a file called list.txt that was generated with the following command:

```shell
find ./lfw2/ -name \*.jpg > list.txt
```

After running this code, the data will be in the `data` tensor, and the labels will be in the `labels` tensor:

```python
import os

import numpy as np
from PIL import Image

# assumes list.txt is a list of filenames, formatted as
#   ./lfw2//Aaron_Eckhart/Aaron_Eckhart_0001.jpg
#   ./lfw2//Aaron_Guiel/Aaron_Guiel_0001.jpg
#   ...
with open('./list.txt') as f:
    files = [line.rstrip() for line in f if line.strip()]

data = np.zeros((len(files), 250, 250))
labels = np.zeros((len(files), 1))

# a little hash map mapping subjects to IDs
ids = {}
scnt = 0

# load in all of our images
for ind, fn in enumerate(files):
    # the subject name is the image's parent directory
    # (works with or without the doubled slash after ./lfw2)
    subject = os.path.basename(os.path.dirname(fn))
    if subject not in ids:  # dict.has_key() no longer exists in Python 3
        ids[subject] = scnt
        scnt += 1
    label = ids[subject]
    # convert('L') guarantees a single-channel (grayscale) 250x250 array
    data[ind, :, :] = np.array(Image.open(fn).convert('L'))
    labels[ind] = label

# data is (13233, 250, 250)
# labels is (13233, 1)
```
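One reasonable way to create the test/training split is to split by subject, so that no person in the test set is ever seen during training. A minimal sketch, assuming the `labels` array produced by the loader above; the function name `split_by_subject` and the 80/20 default are arbitrary choices for illustration.

```python
import numpy as np


def split_by_subject(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) image indices with no subject shared between them."""
    labels = np.asarray(labels).ravel()
    subjects = np.unique(labels)
    rng = np.random.default_rng(seed)
    rng.shuffle(subjects)                      # random subject order
    n_test = int(round(test_fraction * len(subjects)))
    test_subjects = subjects[:n_test]
    is_test = np.isin(labels, test_subjects)   # every image of a test subject
    return np.flatnonzero(~is_test), np.flatnonzero(is_test)
```

For example, `train_idx, test_idx = split_by_subject(labels)` followed by `data[train_idx]` and `data[test_idx]`. Same/different pairs would then be sampled within each split separately, so that test pairs involve only unseen people.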