Objective:

To explore an alternative use of DNNs by implementing the style transfer algorithm. To understand the importance of a complex loss function. To see how we can optimize not only over network parameters, but over other objects (such as images) as well.

Deliverable:

For this lab, you will need to implement the style transfer algorithm of Gatys et al.

- You must formulate an optimization problem over an input image

- You must optimize the image to match both style and content

In your Jupyter notebook, you should turn in the following:

- The final image that you generated

- Your code

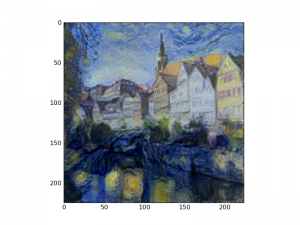

An example image that I generated is shown at the right.

Grading standards:

Your code will be graded on the following:

- 35% Correct extraction of statistics

- 35% Correct construction of cost function

- 20% Correct initialization and optimization of image variable

- 10% Awesome looking final image

Description:

For this lab, you should implement the style transfer algorithm referenced above. To do this, you will need to unpack the given images. Since we want you to focus on implementing the paper and the loss function, we will give you the code for this.

    import io

    import numpy as np
    from PIL import Image
    from torchvision import transforms
    from google.colab import files

    # Resize to 224x224 and normalize with the ImageNet mean/std used to train VGG
    load_and_normalize = transforms.Compose([
        transforms.ToPILImage(),
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ])

    print('Upload Content Image')
    file_dict = files.upload()
    content_image = Image.open(io.BytesIO(file_dict[next(iter(file_dict))]))
    content_image = load_and_normalize(np.array(content_image)).unsqueeze(0)

    print('\nUpload Style Image')
    file_dict = files.upload()
    style_image = Image.open(io.BytesIO(file_dict[next(iter(file_dict))]))
    style_image = load_and_normalize(np.array(style_image)).unsqueeze(0)

Alternatively, once the images have been uploaded to the local filesystem, you can load them with:

    style_image = Image.open("style_image.png")
    style_image = load_and_normalize(np.array(style_image)).unsqueeze(0)

    content_image = Image.open("content_image.png")
    content_image = load_and_normalize(np.array(content_image)).unsqueeze(0)
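Because the loading code normalizes the images with the ImageNet mean and standard deviation, the optimized image has to be mapped back before it can be displayed. The following is a minimal sketch of one way to do that; the helper name `denormalize` and the random stand-in image are assumptions for illustration, not part of the provided lab code.

```python
import torch

# Same ImageNet statistics that load_and_normalize uses, shaped to broadcast over (1, 3, H, W)
IMAGENET_MEAN = torch.tensor([0.485, 0.456, 0.406]).view(1, 3, 1, 1)
IMAGENET_STD = torch.tensor([0.229, 0.224, 0.225]).view(1, 3, 1, 1)

def denormalize(image):
    """Undo the Normalize step on a (1, 3, H, W) tensor and clamp to [0, 1].

    Hypothetical helper; not part of the provided lab code.
    """
    mean = IMAGENET_MEAN.to(image.device)
    std = IMAGENET_STD.to(image.device)
    return (image.detach() * std + mean).clamp(0.0, 1.0)

# Round trip on a random stand-in image (no real image is needed for the check)
raw = torch.rand(1, 3, 224, 224)
normalized = (raw - IMAGENET_MEAN) / IMAGENET_STD
restored = denormalize(normalized)
```

The result can then be moved to the CPU and converted for display, for example with `transforms.ToPILImage()` applied to `restored[0]`.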

For reference on the network, we will give you a PyTorch implementation to look at. Do not simply copy and paste it; instead, implement the steps described in the paper, which has more intuitive notation and does a good job of explaining what each step does.

Additionally, the paper builds its feature representations on the VGG network. The authors use the 19-layer variant (VGG19); for this lab we will use the closely related VGG16. You do NOT need to implement this! PyTorch provides a VGG16 model in torchvision. Here we will show you how to access it.

    import torchvision.models as models

    vgg = models.vgg16(pretrained=True)

However, accessing the model's intermediate layers directly is awkward, so you can use the VGGIntermediate class we have created for you. It registers a forward hook on each requested layer, so that whenever the network runs, the output of that layer is written to a dictionary. The result is a dictionary that maps each requested layer index to that layer's output activations.

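    import torch.nn as nn
    import torchvision.models as models

    class VGGIntermediate(nn.Module):
        def __init__(self, requested=()):
            super(VGGIntermediate, self).__init__()
            self.intermediates = {}  # layer index -> most recent output of that layer
            self.vgg = models.vgg16(pretrained=True).features.eval()
            for i, m in enumerate(self.vgg.children()):
                if i in requested:
                    # curry binds the current value of i for this layer's hook
                    def curry(i):
                        def hook(module, input, output):
                            self.intermediates[i] = output
                        return hook
                    m.register_forward_hook(curry(i))

        def forward(self, x):
            self.vgg(x)  # the hooks fill self.intermediates as a side effect
            return self.intermediates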

To view all the layers in the network, you can print(VGGIntermediate(requested=requested_vals)). The requested values are a list of the integer indices of the layers you want to access (you can find these by hand using the list below or, preferably, with a list comprehension). Printing the model is how the layer list below was obtained. To access the dictionary of activations, you can use:

    vgg = VGGIntermediate(requested=requested_vals)
    vgg.cuda()
    layer = vgg(<image_name>.cuda())
    print(layer.keys())
    output_activation = layer[requested_vals[<i>]]

This makes each requested activation available under the dictionary key equal to that layer's index in the VGG network. (Note: for example, if requested_vals[<i>] is 0, then output_activation is the conv1_1 activation.)

Additionally, for help with understanding the VGG16 model, here is a list of the layers that are contained in the vgg16 model. This should help you know which to index when you're trying to access the activation layers specified in the Gatys et al. paper.

    vgg_names = [
        "conv1_1", "relu1_1", "conv1_2", "relu1_2", "maxpool1",
        "conv2_1", "relu2_1", "conv2_2", "relu2_2", "maxpool2",
        "conv3_1", "relu3_1", "conv3_2", "relu3_2", "conv3_3", "relu3_3", "maxpool3",
        "conv4_1", "relu4_1", "conv4_2", "relu4_2", "conv4_3", "relu4_3", "maxpool4",
        "conv5_1", "relu5_1", "conv5_2", "relu5_2", "conv5_3", "relu5_3", "maxpool5",
    ]
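As a rough illustration of the list-comprehension approach mentioned earlier, the sketch below turns layer names into the integer indices that VGGIntermediate expects. The variable names `style_layer_names`, `content_layer_names`, `style_vals`, and `content_vals` are assumptions for illustration; the layer choices follow the names used in Gatys et al., but check them against the paper yourself.

```python
# Layer names in the order they appear in vgg16().features (copied from the list above)
vgg_names = [
    "conv1_1", "relu1_1", "conv1_2", "relu1_2", "maxpool1",
    "conv2_1", "relu2_1", "conv2_2", "relu2_2", "maxpool2",
    "conv3_1", "relu3_1", "conv3_2", "relu3_2", "conv3_3", "relu3_3", "maxpool3",
    "conv4_1", "relu4_1", "conv4_2", "relu4_2", "conv4_3", "relu4_3", "maxpool4",
    "conv5_1", "relu5_1", "conv5_2", "relu5_2", "conv5_3", "relu5_3", "maxpool5",
]

# Assumed layer choices (hypothetical variable names), following the paper's layer names
style_layer_names = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]
content_layer_names = ["conv4_2"]

# Position in vgg_names == index of the module inside vgg16().features
style_vals = [i for i, name in enumerate(vgg_names) if name in style_layer_names]
content_vals = [i for i, name in enumerate(vgg_names) if name in content_layer_names]
requested_vals = sorted(set(style_vals + content_vals))

print(style_vals)      # [0, 5, 10, 17, 24]
print(content_vals)    # [19]
print(requested_vals)  # [0, 5, 10, 17, 19, 24]
```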

Note: In class, we discussed how to construct a computation graph that reuses the VGG network 3 times (once each for the content, style, and optimization images). It turns out that you don't need to do that. In fact, we merely need to evaluate the VGG network on the content and style images, and save the resulting activations.

The activations can be used to construct a cost function directly. In other words, we don't need to keep around the content/style VGG networks, because we'll never back-propagate through them.
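One detail to watch when saving activations: VGGIntermediate, as written above, returns the same dictionary object on every call, so a second forward pass overwrites the entries from the first. The sketch below shows one possible way to take an independent copy. `TinyIntermediate` is a hypothetical stand-in that uses the same hook mechanism on a tiny random network so the example runs without pretrained weights, and `snapshot` is likewise an assumed helper name.

```python
import torch
import torch.nn as nn

class TinyIntermediate(nn.Module):
    """Hypothetical stand-in for VGGIntermediate: same hook mechanism, tiny random net."""

    def __init__(self, requested):
        super().__init__()
        self.intermediates = {}
        self.net = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
        ).eval()
        for i, m in enumerate(self.net.children()):
            if i in requested:
                m.register_forward_hook(self._make_hook(i))

    def _make_hook(self, i):
        def hook(module, inputs, output):
            self.intermediates[i] = output
        return hook

    def forward(self, x):
        self.net(x)
        return self.intermediates  # same dict object on every call

def snapshot(activations):
    """Copy the activation dict so a later forward pass cannot overwrite it."""
    return {k: v.detach().clone() for k, v in activations.items()}

torch.manual_seed(0)
model = TinyIntermediate(requested=[0, 2])
content_image = torch.rand(1, 3, 32, 32)  # random stand-ins for the real images
style_image = torch.rand(1, 3, 32, 32)

# The targets are constants, so no gradients need to be tracked while computing them
with torch.no_grad():
    content_targets = snapshot(model(content_image))
    style_targets = snapshot(model(style_image))
```

Without the copy, `content_targets` and `style_targets` would be the same dictionary and both would hold the style activations.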

The steps for completion of this lab are:

- Run the VGG network on the content and style images. Save the activations.

- Construct a content loss function, based on the paper

- Construct a style loss function, based on the paper

  - For each layer specified in the paper (also noted in the code), you'll need to construct a Gram matrix

  - That Gram matrix should match an equivalent Gram matrix computed on the style activations

- Construct an Adam optimizer, step size 0.1

- Initialize your optimization image to be the content image

- Optimize!
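The steps above can be sketched roughly as follows. This is not a reference solution: it uses a single random convolution as a hypothetical stand-in for the VGG activations so that it runs without pretrained weights, and the weights `alpha` and `beta`, the step count, and the function names are assumptions. The loss formulas follow the content loss and per-layer style loss as defined in Gatys et al.; in the lab, the style term would be summed over all of the style layers.

```python
import torch

def gram_matrix(activation):
    """Gram matrix of a (1, C, H, W) activation: inner products between channels."""
    _, c, h, w = activation.shape
    feats = activation.reshape(c, h * w)
    return feats @ feats.t()

def content_loss(act, target):
    # Half the sum of squared differences between activations
    return 0.5 * ((act - target) ** 2).sum()

def style_layer_loss(act, target):
    # Squared Gram-matrix difference, scaled by 1 / (4 * N^2 * M^2)
    # with N = number of channels and M = H * W
    _, c, h, w = act.shape
    diff = gram_matrix(act) - gram_matrix(target)
    return (diff ** 2).sum() / (4.0 * c ** 2 * (h * w) ** 2)

torch.manual_seed(0)

# Hypothetical stand-in for one VGG layer; its weights are frozen
extractor = torch.nn.Conv2d(3, 8, kernel_size=3, padding=1)
for p in extractor.parameters():
    p.requires_grad_(False)

content_image = torch.rand(1, 3, 32, 32)
style_image = torch.rand(1, 3, 32, 32)
with torch.no_grad():
    content_target = extractor(content_image)
    style_target = extractor(style_image)

# The image itself is the variable being optimized, initialized to the content image
image = content_image.clone().requires_grad_(True)
optimizer = torch.optim.Adam([image], lr=0.1)
alpha, beta = 1.0, 1e3  # assumed loss weights, to be tuned

losses = []
for step in range(20):
    optimizer.zero_grad()
    act = extractor(image)
    loss = alpha * content_loss(act, content_target) + beta * style_layer_loss(act, style_target)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

Note that only `image` is passed to the optimizer, so the network weights never change.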

Hints:

As a sanity check, if you initialize your image to the content image and use only the content loss as your loss function, the initial loss should be 0.0.
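A minimal sketch of that sanity check is shown below, again with a random convolution as a hypothetical stand-in for the VGG activations and an assumed `content_loss` helper. A small tolerance is used instead of an exact comparison in case the two forward passes differ by floating-point noise.

```python
import torch

def content_loss(act, target):
    # Half the sum of squared differences between activations
    return 0.5 * ((act - target) ** 2).sum()

torch.manual_seed(0)
extractor = torch.nn.Conv2d(3, 8, kernel_size=3, padding=1)  # stand-in for VGG features
content_image = torch.rand(1, 3, 32, 32)

with torch.no_grad():
    content_target = extractor(content_image)

# Initialize the optimization image to the content image
image = content_image.clone().requires_grad_(True)
initial_loss = content_loss(extractor(image), content_target)

# With identical inputs, the content loss should be (numerically) zero
assert initial_loss.item() < 1e-6, "content loss should start at zero"
```

If this check fails in your notebook, one thing to look at is whether the saved content activations were overwritten by a later forward pass.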

Bonus:

There's no official extra credit for this lab, but have some fun with it! Try different content and different styles. See if you can get nicer, higher resolution images out of it.

Also, take a look at the vgg16 source code or its paper (the paper is more intuitive; the source code is very abstract). What happens if you swap out max pooling for average pooling?
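One possible way to try the pooling swap is to rebuild the feature stack with each max-pooling layer replaced by an average-pooling layer of the same size. The sketch below uses a small hypothetical network in place of `vgg16(...).features` so it runs without pretrained weights; the function name `swap_max_for_avg_pool` is an assumption.

```python
import torch
import torch.nn as nn

def swap_max_for_avg_pool(features):
    """Return a new nn.Sequential with every MaxPool2d replaced by an AvgPool2d.

    Other layers are reused as-is, so layer indices stay the same.
    """
    layers = []
    for layer in features.children():
        if isinstance(layer, nn.MaxPool2d):
            layer = nn.AvgPool2d(
                kernel_size=layer.kernel_size,
                stride=layer.stride,
                padding=layer.padding,
                ceil_mode=layer.ceil_mode,
            )
        layers.append(layer)
    return nn.Sequential(*layers)

# Small stand-in with the same conv/relu/maxpool pattern as the VGG feature stack
features = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
)

avg_features = swap_max_for_avg_pool(features)
out = avg_features(torch.rand(1, 3, 32, 32))
```

With VGGIntermediate, the swap would need to happen before the forward hooks are registered, since the hooks are attached to the modules of the original stack.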

You are welcome and encouraged to submit any other style transfer photographs you have, as long as you also submit the required image. Show us the awesome results you can generate!