
Objective

The purpose of this lab is to help students understand the basic TensorFlow workflow. It is composed of five parts:

1. Understand sessions, placeholders, and computation graphs.
2. Understand variables in TensorFlow, and be able to train them with a simple delta rule.
3. Understand vectors, matrices, and tensors, and be able to translate them into NumPy arrays.
4. Understand data importation in Python and TensorFlow, and be able to import a simple CSV file and learn its hidden parameters.
5. (Extra credit) Understand OOP in Python, magic methods, and the decorator pattern, and be able to express a machine learning model in an object-oriented language.

After this lab, students should understand the basic workflow of building and testing a machine learning model in TensorFlow. They should also be able to use what they have learned to build any machine learning model once they understand its inner mechanics. In this lab, we are going to write a simple perceptron for linear regression.

Deliverable

Finish tasks 1 to 5. Zip up all your code and IPython scripts together with the result of task 4, which is a tuple of three numbers (w1, w2, b), then submit the archive on Learning Suite. Task 5 (extra credit) is worth 5% of the total grade of this lab.😊

Grading standards:

Your code will be graded on the following:

- 30% Correct implementation of data generator

- 30% Correct implementation of regression estimator

- 10% Correct implementation of the multiple regression estimator

- 10% Fully vectorized code

- 20% Correct estimation of hidden parameters in foo.csv

- +5% Clean factorization of computation graphs into classes

Understand sessions, placeholders, and computation graphs

Key concepts:

Computation graphs: A computation graph is essentially an electric circuit, or, if you are a math student, a dynamical system. There are three things we would like to do with it: first, feed it inputs; second, measure the readings of its output nodes; third, occasionally trigger operations for more control over the system.

Sessions: A session provides a framework for sending signals to a graph and reading signals from it. Its syntax is very similar to that of a file stream: you open it, use it, and close it, or let a with block close it for you.

Placeholders: Placeholders are the input ports of a graph. Each time we run a computation graph to trigger an operation or measure a set of nodes that depend on placeholders, we must send in an input dictionary (the feed_dict argument of sess.run). This dictionary specifies the input values used to generate the outputs.
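To make these three concepts concrete without TensorFlow, here is a hypothetical pure-Python toy. The class names Placeholder, Constant, Add, Mul, and ToySession are invented for illustration and are not TensorFlow APIs. Building nodes only records the computation. Nothing is evaluated until a session runs a node with a feed dictionary that supplies every placeholder the node depends on.

```python
class Node:
    """Base class: creating a node describes a computation; it computes nothing."""

    def evaluate(self, feed_dict):
        raise NotImplementedError


class Placeholder(Node):
    """Input port: its value must be supplied in feed_dict at run time."""

    def __init__(self, name):
        self.name = name

    def evaluate(self, feed_dict):
        if self not in feed_dict:
            raise KeyError("no value fed for placeholder " + self.name)
        return feed_dict[self]


class Constant(Node):
    def __init__(self, value):
        self.value = value

    def evaluate(self, feed_dict):
        return self.value


class Add(Node):
    def __init__(self, a, b):
        self.a, self.b = a, b

    def evaluate(self, feed_dict):
        return self.a.evaluate(feed_dict) + self.b.evaluate(feed_dict)


class Mul(Node):
    def __init__(self, a, b):
        self.a, self.b = a, b

    def evaluate(self, feed_dict):
        return self.a.evaluate(feed_dict) * self.b.evaluate(feed_dict)


class ToySession:
    """Loosely mimics the shape of sess.run(fetches, feed_dict); hypothetical."""

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        return False  # nothing to release in this toy

    def run(self, fetches, feed_dict):
        # A list of fetches is evaluated node by node, like sess.run([op1, op2], ...)
        if isinstance(fetches, list):
            return [node.evaluate(feed_dict) for node in fetches]
        return fetches.evaluate(feed_dict)


# Build the graph for y = -6.7 * x + 2 + noise (nothing is computed yet).
x = Placeholder("x")
noise = Placeholder("noise")
y = Add(Add(Mul(Constant(-6.7), x), Constant(2.0)), noise)

# Measure the output node by feeding values into the input ports.
with ToySession() as sess:
    print(sess.run(y, {x: 1.0, noise: 0.0}))
```

Fetching y without feeding noise raises a KeyError here. TensorFlow 1.x similarly raises an error when a required placeholder is missing from the feed dictionary.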

Related readings:

- https://www.tensorflow.org/get_started/get_started#the_computational_graph
- https://learningtensorflow.com/lesson4/
- https://learningtensorflow.com/Visualisation/
- https://www.tensorflow.org/versions/r1.0/get_started/graph_viz

Task 1

Simulate the behavior of sampling noisy data from a regression line on a given range. Your computation graph should mimic the behavior of the following Python code:

```python
import numpy as np


def noisy_line(x, noise):
    return -6.7 * x + 2 + noise


for _ in range(100):
    x_hat = np.random.uniform(-10, 10)
    noise_hat = np.random.uniform(-1, 1)
    print(x_hat, noisy_line(x_hat, noise_hat))
```
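The grading rewards fully vectorized code, so it can help to see the same sampling done for a whole batch at once. The sketch below uses NumPy only, and the function name sample_noisy_line is hypothetical. It draws all inputs and all noise terms in single calls instead of looping. In a TensorFlow 1.x graph, the analogous op for drawing a batch of uniform samples is tf.random_uniform(shape, minval, maxval).

```python
import numpy as np


def sample_noisy_line(n, low=-10.0, high=10.0, rng=None):
    """Draw n (x, y) pairs from y = -6.7 * x + 2 + noise without a Python loop.

    Same distribution as the loop above: x ~ U[low, high), noise ~ U[-1, 1).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = rng.uniform(low, high, size=n)      # n inputs at once
    noise = rng.uniform(-1.0, 1.0, size=n)  # n noise terms at once
    y = -6.7 * x + 2 + noise                # elementwise over the whole batch
    return x, y


x_batch, y_batch = sample_noisy_line(100, rng=np.random.default_rng(0))
print(x_batch.shape, y_batch.shape)  # (100,) (100,)
```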

Hints:

1. Don't use functions or classes at this point. Calling a function that defines ops more than once creates duplicate operators in the graph.

2. You can visualize your graph with three lines of code:

a. Surround your graph definition with:

```python
with tf.name_scope("name_of_scope") as scope:
    ...  # graph definition goes here
```

b. Inside your session block, after the main loop, include:

```python
writer = tf.summary.FileWriter("path_to_folder", sess.graph)
writer.close()
```

c. This saves your graph summary to "path_to_folder".

d. Run this command in a console:

```shell
tensorboard --logdir="path_to_folder"
```

Understand variables in TensorFlow

Key concepts:

Variables: Variables are mutable state values in a computation graph. We typically set up a computation scheme to learn them from given data. In perceptron terminology, we also call them trainable weights.

Delta rule: The delta rule is one of the most rudimentary learning schemes in machine learning. It is formulated as $\Delta w_i = c\,(t - \mathrm{net})\,x_i$. Here $\Delta w_i$ is the change of weight $i$, $c$ is the learning rate, and $t$ is the target value. $\mathrm{net}$ is the sum of all weighted features, in other words the dot product between the weights and the features, and $x_i$ is input feature $i$. The intuition is that every time the current weights fail to predict the target value for a reference input x, the algorithm makes a small correction in the right direction. If you are familiar with the perceptron rule from lab 02, you may notice that we simply replace the prediction with net in the equation.
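One standard way to see why these corrections point "in the right direction" is that the delta rule is a gradient-descent step on the squared error of a single example. The derivation below uses the same symbols as the formula above. The error function $E$ is the usual choice for this argument and is introduced here only for intuition:

$$
\begin{aligned}
E &= \tfrac{1}{2}\,(t - \mathrm{net})^2, \qquad \mathrm{net} = \sum_j w_j x_j \\
\frac{\partial E}{\partial w_i} &= -(t - \mathrm{net})\,x_i \\
\Delta w_i &= -c\,\frac{\partial E}{\partial w_i} = c\,(t - \mathrm{net})\,x_i
\end{aligned}
$$

This is also why TensorFlow's built-in optimizers can be viewed as more elaborate versions of the same "compute an update, then assign it" step.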

Related readings:

- https://www.tensorflow.org/programmers_guide/variables
- https://www.tensorflow.org/get_started/get_started#tftrain_api
- https://learningtensorflow.com/lesson2/

Task 2

Build a computation graph that allows training the slope and bias variables. Your code should mimic the functionality of the following Python script. Try not to use any of TensorFlow's built-in optimization functions, so that you get a feel for what they do underneath.

```python
import numpy as np


def noisy_line(x, noise):
    return -6.7 * x + 2 + noise


class Regression:
    def __init__(self):
        self.learning_rate = 0.005
        self.m = 0.1
        self.b = 0.1

    def learn(self, datum):
        x_hat, target = datum
        # Compute the error term once, so m and b are both updated
        # from the same prediction, as the delta rule prescribes.
        delta = self.delta(x_hat, target)
        self.m += delta * x_hat
        self.b += delta * 1.0  # the bias input is the constant 1

    def delta(self, x_hat, target):
        net = self.m * x_hat + self.b
        return self.learning_rate * (target - net)


regression_model = Regression()
for _ in range(1000):
    x_hat = np.random.uniform(-10, 10)
    noise_hat = np.random.uniform(-1, 1)
    y_hat = noisy_line(x_hat, noise_hat)
    regression_model.learn((x_hat, y_hat))
print("I guess the line is: y = {}*x + {}".format(regression_model.m, regression_model.b))
```

Hints:

1. Use tf.assign to update variables in a computation graph. We will use other optimization operators in the future, but they are just fancier versions of tf.assign.

2. Use tf.global_variables_initializer() to initialize variables

3. You can run multiple ops in a single sess.run call by putting them into a list, like the following:

```python
# Extend the fetch list and the feed dictionary with as many entries as you need.
r1, r2, r3 = sess.run([op1, op2, op3], {ph1: ph1_hat, ph2: ph2_hat})
```

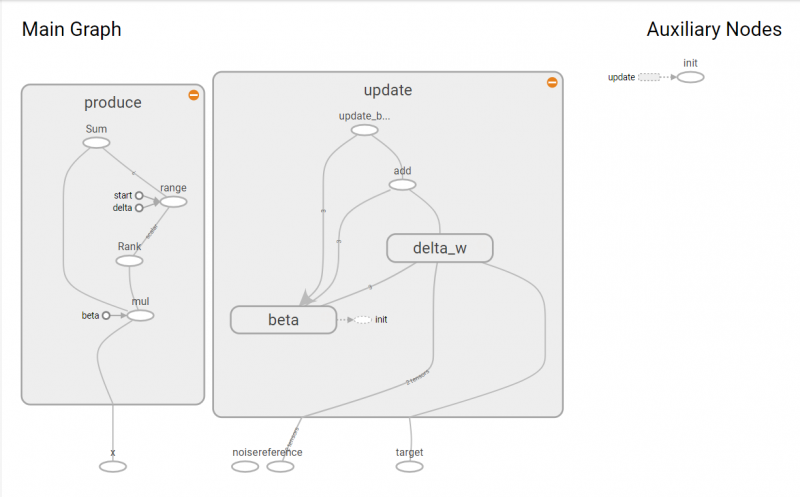

4. My computation graph visualization looks like the following:
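Before wiring the update into tf.assign ops, it can help to check the arithmetic of a single delta-rule step by hand. The sketch below is plain Python, and the helper name delta_rule_step is hypothetical. It computes the error term once from the current (m, b) and applies it to both, as the delta rule formula prescribes. The example point x = 2 lies on the noise-free line y = -6.7x + 2, so the target is -11.4.

```python
def delta_rule_step(m, b, x, target, learning_rate=0.005):
    """One delta-rule update for the model y = m * x + b (hypothetical helper)."""
    net = m * x + b                          # prediction with the current weights
    delta = learning_rate * (target - net)   # c * (t - net), computed once
    return m + delta * x, b + delta * 1.0    # the bias input is the constant 1


# Worked example: net = 0.1 * 2 + 0.1 = 0.3, so c * (t - net) = 0.005 * (-11.7) = -0.0585.
m, b = delta_rule_step(0.1, 0.1, x=2.0, target=-11.4)
print(round(m, 4), round(b, 4))  # -0.017 0.0415
```

In the graph version, the same arithmetic becomes tensor ops on the variables, and the two results are written back with tf.assign.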

Understand vectors, matrices and tensors

Related reading:

- https://learningtensorflow.com/lesson3/
- https://www.tensorflow.org/get_started/get_started#top_of_page

Task 3

Extend the code you have for learning a simple regression line to allow learning multiple regression weights. Your TensorFlow computation graph should mimic the functionality of the following Python script:

```python
import numpy as np


def noisy_line(x, noise):
    assert len(x.shape) == 1 and x.shape[0] == 2
    beta = [-2.3, 4.5, 9.4]
    x = np.append(x, [1])  # constant input 1, so beta[2] acts as the bias
    return np.dot(beta, x) + noise


class Regression:
    def __init__(self):
        self.learning_rate = 0.005
        self.beta = np.zeros(3)

    def learn(self, datum):
        x_hat, target = datum
        x_hat = np.append(x_hat, [1])
        self.beta += self.delta_w(x_hat, target)

    def delta_w(self, x_hat, target):
        net = np.dot(x_hat, self.beta)  # generalizes self.m * x_hat + self.b
        return self.learning_rate * (target - net) * x_hat


regression_model = Regression()
for _ in range(1000):
    x_hat = np.random.uniform(-10, 10, size=(2,))
    noise_hat = np.random.uniform(-1, 1)
    y_hat = noisy_line(x_hat, noise_hat)
    regression_model.learn((x_hat, y_hat))
print("I guess beta is: {}".format(regression_model.beta))
```

Hints:

1. You can treat TensorFlow tensors as NumPy arrays most of the time.

2. Use tf.reduce_sum to implement the dot product: multiply elementwise, then sum.
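Because tensors behave much like NumPy arrays, you can prototype the reduce-sum trick in NumPy first. This sketch shows the sum of an elementwise product for a single example and for a batch of examples. The batch case is what makes the code fully vectorized. The feature values are arbitrary. The comments name the TensorFlow 1.x call with the same arithmetic, tf.reduce_sum(tensor, axis=...).

```python
import numpy as np

beta = np.array([-2.3, 4.5, 9.4])

# Single example with the constant bias input 1 appended.
x = np.array([1.0, 2.0, 1.0])
net_single = np.sum(x * beta)  # same arithmetic as tf.reduce_sum(x * beta)
assert np.isclose(net_single, np.dot(x, beta))

# A batch of examples, one per row: summing along axis 1 gives one net value
# per row. Broadcasting multiplies every row by beta, so no Python loop is needed.
X = np.array([[1.0, 2.0, 1.0],
              [0.0, -1.0, 1.0],
              [3.0, 0.5, 1.0]])
net_batch = np.sum(X * beta, axis=1)  # like tf.reduce_sum(X * beta, axis=1)
assert np.allclose(net_batch, X @ beta)
print(net_batch)
```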

Understand data importation in Python and TensorFlow

There is no secret to data importation once we understand what placeholders are in TensorFlow. We just need to read data in efficiently enough that it does not slow down learning. Later this semester we will work with images and natural language, which require more advanced data pre-processing; for now, let's focus on the basics. We have included links below for those of you who are interested in going further.

Related reading:

- https://www.tensorflow.org/api_guides/python/python_io
- https://www.tensorflow.org/programmers_guide/reading_data

Task 4

Read in the provided foo.csv file and estimate the regression line (w1, w2, b) behind the data.

Hints:

1. pandas.read_csv followed by DataFrame.to_numpy() will do the magic. DataFrame.as_matrix, which older tutorials use, was removed in pandas 1.0.
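Here is a minimal sketch of the import step, plus an independent sanity check for your delta-rule answer. It rests on several assumptions. The layout of foo.csv is not shown here, so the sketch assumes a header row and the columns x1, x2, y in that order. It also runs on synthetic stand-in data with made-up weights, not on the real file. It uses the standard csv module to stay dependency-light; with pandas, pandas.read_csv("foo.csv").to_numpy() yields the same kind of array. The check uses ordinary least squares (np.linalg.lstsq), which is a different technique from the delta rule the task asks for. Use it only to confirm that your learned (w1, w2, b) is in the right neighborhood.

```python
import csv
import io

import numpy as np

# Synthetic stand-in for foo.csv (ASSUMED layout: header, then columns x1, x2, y).
rng = np.random.default_rng(0)
true_w1, true_w2, true_b = 1.5, -3.0, 0.7  # made-up values for this demo only
rows = ["x1,x2,y"]
for _ in range(200):
    x1, x2 = rng.uniform(-10, 10, size=2)
    y = true_w1 * x1 + true_w2 * x2 + true_b + rng.uniform(-1, 1)
    rows.append("{},{},{}".format(x1, x2, y))
csv_text = "\n".join(rows)

# Parse: skip the header row, then convert every field to float.
reader = csv.reader(io.StringIO(csv_text))
next(reader)
data = np.array([[float(v) for v in row] for row in reader])
features, targets = data[:, :2], data[:, 2]

# Cross-check with ordinary least squares (NOT the delta rule):
# a column of ones is appended so the last coefficient is the bias b.
design = np.hstack([features, np.ones((len(features), 1))])
coef, *_ = np.linalg.lstsq(design, targets, rcond=None)
w1, w2, b = coef
print("w1={:.3f}, w2={:.3f}, b={:.3f}".format(w1, w2, b))
```

For the real file, replace the synthetic block with a read of foo.csv, after checking its actual header and column order.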

Understand OOP in TensorFlow (extra credit)

Object-oriented programming (OOP) is a powerful tool for structuring our code, and using it seamlessly with TensorFlow gives us more control when designing new ML algorithms. Although this part of the lab is an extra credit exercise, it is highly recommended for all CS students. It should take you at most 30 minutes if you are already familiar with OOP in Python.

Key ideas:

Running ops vs. creating ops: Every line of TensorFlow code that describes an operation creates nodes and links them into the computation graph; it does not compute anything by itself. Hence, putting TensorFlow code into functions and calling them on every run results in duplicated definitions of variables and ops. The question we want to answer in this section is how to organize our computations into logical blocks of code, namely functions and classes, without mixing up the processes of creating ops and running them.
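The duplication problem is easy to reproduce without TensorFlow. In this hypothetical sketch, the list graph_nodes stands in for the default graph and create_op stands in for any op constructor; neither is a TensorFlow API. Calling a graph-building function inside the loop keeps adding nodes. Building once and only running inside the loop keeps the graph fixed.

```python
# Hypothetical stand-in for the default graph: every op created is recorded here.
graph_nodes = []


def create_op(name):
    """Creating an op only appends a node; it does not compute anything."""
    graph_nodes.append(name)
    return name


def build_prediction():
    # Every call to this function defines a fresh copy of these nodes.
    create_op("w")
    create_op("b")
    return create_op("net")


# Anti-pattern: building the graph inside the training loop.
for _ in range(3):
    build_prediction()
print(len(graph_nodes))  # 9: three copies of w, b and net

# Better: build once, then only run the existing node in the loop.
graph_nodes.clear()
net = build_prediction()
for _ in range(3):
    fetched = net  # stands in for sess.run(net, feed_dict), which adds no nodes
print(len(graph_nodes))  # 3
```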

Magic methods: These are methods of a class whose names begin and end with a double underscore, such as __init__. The magic is that Python invokes them implicitly in response to built-in syntax and operations, rather than your code calling them by name. For example, to write a constructor for your class, you simply define (override) the __init__ method.
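Here is a small illustration of magic methods being invoked implicitly. The Line class is a hypothetical toy model of y = m * x + b, not part of the lab's required code. Python calls __init__ when the object is created, __call__ when the object is used like a function, and __repr__ when the object is printed.

```python
class Line:
    """Toy model y = m * x + b used to show a few magic methods."""

    def __init__(self, m, b):
        # Invoked implicitly by Line(m, b): this is the constructor.
        self.m = m
        self.b = b

    def __call__(self, x):
        # Invoked implicitly by line(x): the object behaves like a function.
        return self.m * x + self.b

    def __repr__(self):
        # Invoked implicitly by repr(line); print() falls back to it here
        # because the class does not define __str__.
        return "Line(m={}, b={})".format(self.m, self.b)


line = Line(-6.7, 2)
print(line)     # Line(m=-6.7, b=2)
print(line(0))  # 2.0
```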

Decorator pattern: A decorator is a function that takes a function and returns a new function that extends its behavior without changing the original function's implementation. In Python, writing @decorator on the line above a def applies the decorator to that function.

@property decorator: Although all attributes of a Python object are visible to its users, we may still want getters and setters with special behaviors, for example bounds checking. The @property decorator lets us do exactly that while keeping plain attribute syntax.
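A minimal decorator sketch, assuming nothing beyond the standard library. The hypothetical count_calls wraps a function to count its invocations without touching the function's body. functools.wraps copies the original name and docstring onto the wrapper; hint 3 of Task 5 mentions the same helper.

```python
import functools


def count_calls(function):
    """Decorator: wrap `function` so its calls are counted, body unchanged."""

    @functools.wraps(function)  # keep the original __name__ and __doc__
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return function(*args, **kwargs)

    wrapper.calls = 0
    return wrapper


@count_calls  # equivalent to: predict = count_calls(predict)
def predict(m, b, x):
    """Evaluate y = m * x + b."""
    return m * x + b


predict(2, 1, 3)
predict(2, 1, 4)
print(predict.calls, predict.__name__)  # 2 predict
```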
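Here is a sketch of @property doing the bounds checking mentioned above. The Learner class and its rule that the learning rate must be positive are hypothetical examples. Callers read and assign model.learning_rate like a plain attribute, but every assignment goes through the setter, including the one in __init__.

```python
class Learner:
    """Hypothetical learner whose learning rate is validated on assignment."""

    def __init__(self, learning_rate=0.005):
        self.learning_rate = learning_rate  # routed through the setter below

    @property
    def learning_rate(self):
        # Getter: accessed as model.learning_rate, no parentheses.
        return self._learning_rate

    @learning_rate.setter
    def learning_rate(self, value):
        # Setter with bounds checking: reject non-positive rates.
        if value <= 0:
            raise ValueError("learning_rate must be positive, got {}".format(value))
        self._learning_rate = value


model = Learner()
model.learning_rate = 0.01  # accepted
try:
    model.learning_rate = -1  # rejected by the setter
except ValueError as err:
    print(err)  # learning_rate must be positive, got -1
```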

Related reading:

- https://danijar.com/structuring-your-tensorflow-models/
- https://www.learnpython.org/en/Classes_and_Objects
- https://docs.python.org/3/reference/datamodel.html#special-method-names
- https://www.thecodeship.com/patterns/guide-to-python-function-decorators/
- https://www.programiz.com/python-programming/property

Task 5

Refactor your code into two classes: one representing the computation model of your data generator, and one representing your regression learner.

Hints:

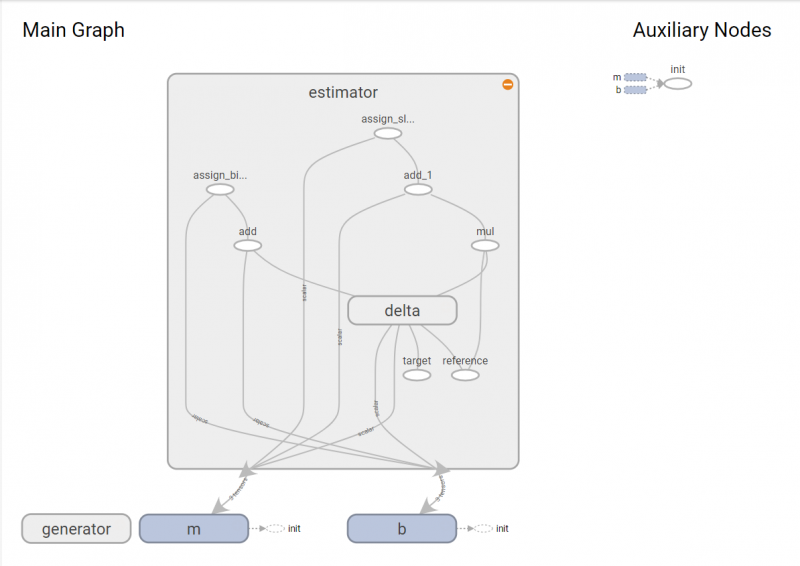

1. I got the following computation graph which are scoped by functions

2. The article by Danijar have shed great insight about this topic. Solutions to the problem should become trivial after reading his article.

3. @functools.wraps(function) can be replaced by @six.wraps(function) for python 2 compatibility after installing and importing the python library “six” in your project environment.
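The core trick for this refactor can be sketched without TensorFlow. The idea follows the lazy-property decorator discussed in the Danijar article linked above. A graph-building method runs once per instance, and its result is cached, so later accesses reuse the same ops instead of creating new ones. ToyModel and its build_count attribute are hypothetical. build_count stands in for "ops added to the graph", and a real model would return a tensor instead of a string.

```python
import functools


def lazy_property(function):
    """Run `function` once per instance and cache the result as an attribute."""
    attribute = "_cache_" + function.__name__

    @property
    @functools.wraps(function)
    def decorator(self):
        if not hasattr(self, attribute):
            setattr(self, attribute, function(self))  # first access: build
        return getattr(self, attribute)               # later accesses: reuse

    return decorator


class ToyModel:
    """Hypothetical model; build_count counts how often the 'ops' are built."""

    def __init__(self):
        self.build_count = 0
        self.prediction  # touch the property so the graph is built up front

    @lazy_property
    def prediction(self):
        self.build_count += 1
        return "prediction-op"  # a real model would return a tensor here


model = ToyModel()
for _ in range(5):
    model.prediction  # reuses the cached op; nothing new is built
print(model.build_count)  # 1
```

Touching the properties in __init__ makes every op exist before the training loop starts, so the loop only runs ops and never creates them.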